Amplifying Creativity: Building an AI-Powered Content Creation Assistant — Part 1

Welcome to the Journey of Creating a Content Creation Assistant!

The demand for fresh, relevant, and visually engaging content is as strong as ever. Yet, for many content creators, managing the sheer volume and variety of content required across different platforms can be overwhelming. Enter the Content Creation Assistant, a tool that streamlines content generation.

This series of blog posts takes you through the process of developing a content creation assistant that leverages state-of-the-art AI models and APIs. Imagine a world where blog posts, social media updates, and captivating images are generated effortlessly. Part 1 shows how the LlamaIndex framework, the Tavily Search API, OpenAI GPT, and DALL-E 3 combine into a seamless content creation experience.

Series:

- Amplifying Creativity: Building an AI-Powered Content Creation Assistant — Part 1

- Amplifying Creativity: Building an AI-Powered Content Creation Assistant — Part 2

What to Expect

In this post, we’ll delve deep into the essence of the Content Creation Assistant, exploring its core components and functionalities:

- Blog Post Generation: Discover how advanced language models and real-time data integration come together to craft informative and engaging articles.

- Social Media Post Creation: Learn how to automate compelling social media content tailored to boost engagement across various platforms.

- Image Generation: See the magic of DALL-E 3 in action as it creates unique images that complement textual content and enhance visual storytelling.

By the end of this post, you will not only understand the technical intricacies of building the assistant but also appreciate the broader implications of AI in content creation. Join us as we navigate the cutting edge of automated content generation, unveiling the potential to transform how content is conceived, created, and consumed.

You can find the source code for this project here: Content Creation Assistant AI

Understanding the technologies used

In the first part of this series, we are building a prototype using:

- Jupyter Notebook

- LlamaIndex

- OpenAI GPT and DALL-E 3 Models

Jupyter Notebook is a free and open-source web application that enables users to create and share interactive documents containing live code, visualizations, narrative text, and markdown. It’s great for experimentation and iteration.

LlamaIndex is an innovative framework designed to significantly enhance the capabilities of Large Language Models (LLMs). This framework serves as a vital connection between structured data and language models, facilitating the development of more intelligent and responsive applications. By organizing and optimizing data in a way that highlights the strengths of LLMs, LlamaIndex enables these models to perform more effectively, driving improved outcomes in various tasks such as natural language understanding, information retrieval, and conversational AI.

This year, LlamaIndex introduced workflows, which will be the focus of this article. Workflows are an event-driven, step-based way to control application execution flows. Before we dive into the content creation workflow, it is important to understand key concepts in LlamaIndex Workflows: Event, Step, and Context:

- Step: Steps are the essential building blocks of a workflow. Each step is a Python function that executes a specific task, such as retrieving context from various sources, getting a response from a language model, or processing data. Steps interact with one another by receiving and emitting events, and they can access a shared context, which makes it possible to manage state across the workflow.

- Event: Events are the data carriers and flow controllers of a workflow. They are Pydantic objects, so their fields are validated, and you can define custom attributes on them. Events transfer information between steps and guide the workflow’s progression. Two event types are notable: StartEvent and StopEvent. The StartEvent signals the beginning of the workflow, while the StopEvent marks its conclusion. Together, they provide clear entry and exit points.

- Context: You can pass data directly between events, but this has limitations. If you need to pass data between steps that are not directly connected, you must pass it through every step in between, which results in code that is harder to read and maintain. The Context object is available at every step in a workflow, so you can maintain state throughout the workflow while keeping the code concise and easier to maintain.
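To make the three concepts concrete, here is a minimal sketch in plain Python using only the standard library. It is a toy model of the idea, not LlamaIndex's implementation: the dataclasses stand in for Pydantic-based events, a plain dict stands in for the Context object, and the names `DraftEvent`, `write_draft`, `publish`, and `run_toy_workflow` are hypothetical.

```python
import asyncio
from dataclasses import dataclass


# Toy events: plain dataclasses stand in for LlamaIndex's Pydantic-based Event.
@dataclass
class StartEvent:
    query: str


@dataclass
class DraftEvent:
    text: str


@dataclass
class StopEvent:
    result: str


# Steps: async functions that receive one event and return the next one.
async def write_draft(ctx: dict, ev: StartEvent) -> DraftEvent:
    ctx["query"] = ev.query  # stash state for steps further down the line
    return DraftEvent(text=f"Draft about {ev.query}")


async def publish(ctx: dict, ev: DraftEvent) -> StopEvent:
    # Read state written by an earlier step without threading it through events.
    return StopEvent(result=f"{ev.text} (topic: {ctx['query']})")


# Routing table: the type of the incoming event selects the step to run.
STEPS = {StartEvent: write_draft, DraftEvent: publish}


async def run_toy_workflow(query: str) -> str:
    ctx: dict = {}  # shared context, visible to every step
    ev = StartEvent(query=query)
    while not isinstance(ev, StopEvent):
        ev = await STEPS[type(ev)](ctx, ev)
    return ev.result


print(asyncio.run(run_toy_workflow("Albert Einstein")))
```

The point of the sketch is the routing: no step calls another step directly. Each one only declares which event it accepts and which it emits, and the loop decides what runs next.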

OpenAI’s GPT (Generative Pre-trained Transformer) is a leading language model recognized for generating coherent and contextually relevant text. By leveraging extensive datasets and advanced machine learning techniques, GPT effectively understands and replicates the nuances of human language. This skill enables it to perform various tasks, such as drafting articles, creating dialogue, and summarizing information, thereby serving as a valuable asset for content creation and communication across formats, including blogs and social media.

Similarly, DALL-E 3, another innovation from OpenAI, generates unique and visually striking images from textual descriptions. By comprehensively understanding language semantics, DALL-E 3 translates descriptive text into high-quality visuals, offering a novel means of visualizing concepts. This capability is particularly beneficial in creative fields where original imagery is essential, enhancing storytelling by providing a rich, integrated experience of both text and visuals.

Designing the Content Creation Workflow

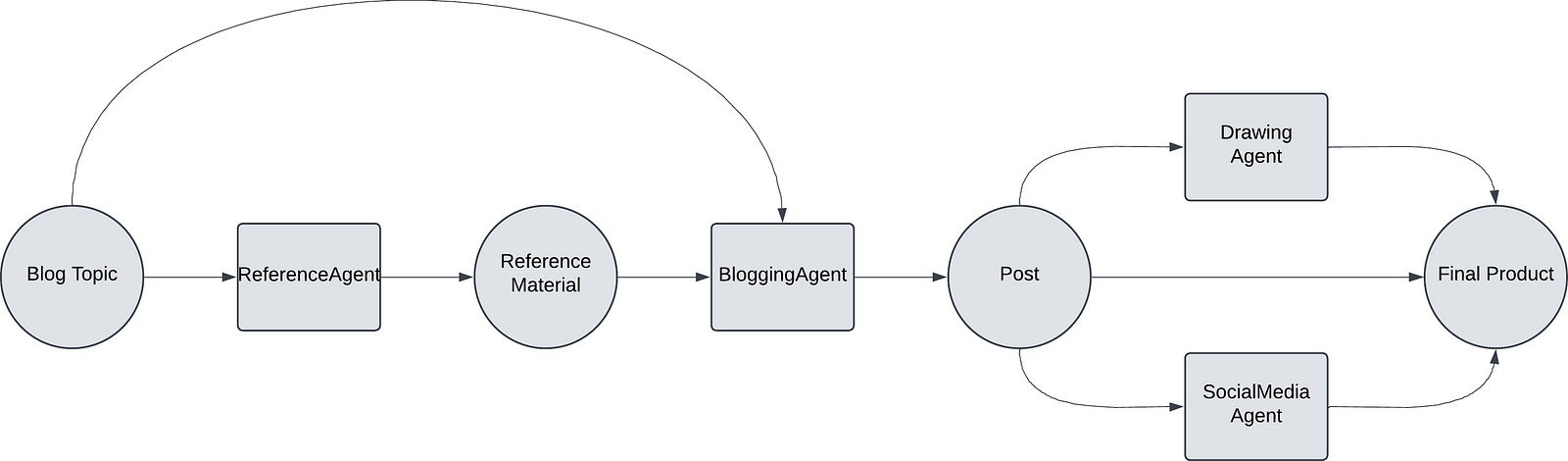

The workflow consists of four agents:

- ReferenceAgent: The reference agent is an optional flow that augments the LLM with updated information. It uses the Tavily search API, a specialized search engine designed for large language models (LLMs) and AI agents.

- BloggingAgent: The blogging agent is tasked with writing the blog post.

- SocialMediaAgent: The social media agent is tasked with writing a LinkedIn post. In future iterations, we will make this more dynamic by including other platforms, extending the workflow, and using a more agentic approach.

- DrawingAgent: The drawing agent uses DALL-E 3 to generate an image based on a blog post summary.

To get started with creating a workflow, you need to define the event types:

    from llama_index.core.workflow import Event

    # Events carry data between steps. The uuid identifies one run and names its output directory.
    class ResearchEvent(Event):
        query: str
        uuid: str

    class BlogEvent(Event):
        query: str
        research: str
        uuid: str

    class BlogWithoutResearch(Event):
        query: str
        uuid: str

    class SocialMediaEvent(Event):
        blog: str
        uuid: str

    class SocialMediaCompleteEvent(Event):
        result: str

    class IllustratorEvent(Event):
        blog: str
        uuid: str  # declared explicitly because the illustrator step reads ev.uuid

    class IllustratorCompleteEvent(Event):
        result: str

Once we have our events defined, we can create a Workflow class. Before doing so, let’s review how the workflow is started, to make the optional research flow mentioned above clear:

    # Top-level await works inside a Jupyter notebook cell.
    w = ContentCreationWorkflow(timeout=120, verbose=False)
    result = await w.run(query="Albert Einstein", research=True)
    print(result)

First, we create an instance of the workflow, which we define next. We then call its run() method, passing the keyword arguments that the start step reads from the StartEvent (query and research).

Next, let’s break down the ContentCreationWorkflow:

    # Imports inferred from the names used below.
    import os
    import uuid
    from io import BytesIO

    import requests
    from PIL import Image
    from llama_index.core.workflow import Context, StartEvent, StopEvent, Workflow, step
    from llama_index.llms.openai import OpenAI

    # The prompt templates, openai_api_key, save_file, tavily_search, TavilySearchInput,
    # dalle3, and generate_image_with_retries come from the project's source code.

    class ContentCreationWorkflow(Workflow):
        @step
        async def start(self, ctx: Context, ev: StartEvent) -> ResearchEvent | BlogWithoutResearch:
            print("Starting content creation", ev.query)
            run_id = str(uuid.uuid4())  # one identifier per run, reused by every step
            if not ev.research:
                return BlogWithoutResearch(query=ev.query, uuid=run_id)
            return ResearchEvent(query=ev.query, uuid=run_id)

        @step
        async def step_research(self, ctx: Context, ev: ResearchEvent) -> BlogEvent:
            print("Researching users query")
            search_input = TavilySearchInput(
                query=ev.query,
                max_results=3,
                search_depth="basic",
            )
            research = tavily_search(search_input)
            return BlogEvent(query=ev.query, research=research, uuid=ev.uuid)

        @step
        async def step_blog_without_research(self, ctx: Context, ev: BlogWithoutResearch) -> SocialMediaEvent | IllustratorEvent:
            print("Writing blog post without research")
            print("uuid", ev.uuid)
            llm = OpenAI(model="gpt-4o-mini", api_key=openai_api_key)
            prompt = blog_template.format(query_str=ev.query)
            result = await llm.acomplete(prompt, formatted=True)
            save_file(result.text, ev.uuid)
            print(result)
            # Emit two events so the social media and illustrator steps run in parallel.
            ctx.send_event(SocialMediaEvent(blog=result.text, uuid=ev.uuid))
            ctx.send_event(IllustratorEvent(blog=result.text, uuid=ev.uuid))

        @step
        async def step_blog(self, ctx: Context, ev: BlogEvent) -> SocialMediaEvent | IllustratorEvent:
            print("Writing blog post")
            llm = OpenAI(model="gpt-4o-mini", api_key=openai_api_key)
            prompt = blog_and_research_template.format(query_str=ev.query, research=ev.research)
            result = await llm.acomplete(prompt, formatted=True)
            save_file(result.text, ev.uuid)
            # Emit two events so the social media and illustrator steps run in parallel.
            ctx.send_event(SocialMediaEvent(blog=result.text, uuid=ev.uuid))
            ctx.send_event(IllustratorEvent(blog=result.text, uuid=ev.uuid))

        @step
        async def step_social_media(self, ctx: Context, ev: SocialMediaEvent) -> SocialMediaCompleteEvent:
            print("Writing social media post")
            llm = OpenAI(model="gpt-4o-mini", api_key=openai_api_key)
            prompt = linked_in_template.format(blog_content=ev.blog)
            results = await llm.acomplete(prompt, formatted=True)
            save_file(results.text, ev.uuid, type="LinkedIn")
            return SocialMediaCompleteEvent(result="LinkedIn post written")

        @step
        async def step_illustrator(self, ctx: Context, ev: IllustratorEvent) -> IllustratorCompleteEvent:
            print("Generating image")
            # First ask the LLM to condense the blog post into an image prompt.
            llm = OpenAI(model="gpt-4o-mini", api_key=openai_api_key)
            image_prompt_instruction_generator = image_prompt_instructions.format(blog_post=ev.blog)
            image_prompt = await llm.acomplete(image_prompt_instruction_generator, formatted=True)
            client = dalle3(api_key=openai_api_key)
            response = await generate_image_with_retries(client, image_prompt.text)
            image_url = response.data[0].url
            # Download the generated image and save it next to the blog and LinkedIn post.
            image_response = requests.get(image_url)
            image = Image.open(BytesIO(image_response.content))
            directory = f"./{ev.uuid}"
            os.makedirs(directory, exist_ok=True)
            image.save(f"{directory}/generated_image.png")
            return IllustratorCompleteEvent(result="Images drawn")

        @step
        async def step_collection(self, ctx: Context, ev: SocialMediaCompleteEvent | IllustratorCompleteEvent) -> StopEvent:
            # collect_events returns None until both completion events have arrived.
            if ctx.collect_events(ev, [SocialMediaCompleteEvent, IllustratorCompleteEvent]) is None:
                return None
            return StopEvent(result="Done")

- We create a class that extends the base Workflow class provided by the llama_index.core.workflow module.

- We define a start step that accepts Context and StartEvent parameters. The StartEvent contains the query and research parameters passed to the run() method. The step uses the research parameter to decide whether to augment the blog post with up-to-date information from Tavily. It also generates a unique identifier, which the blogging, social media, and image generation steps later use to save their outputs in a new directory local to the project.

- If the research parameter is set to True, we enter the research step. In this step, we take the topic of the blog post and use the Tavily Search API to gather relevant information that is later fed into the LLM context. After the results are returned, we publish a BlogEvent with the query and research attached.

- The blog post step initializes an OpenAI client using the gpt-4o-mini model. I created predefined prompt templates that contain instructions for the blog writing agent. The research results are appended to these instructions before they are passed to OpenAI. The model returns a blog post on the topic, the step saves it to your local directory, and it publishes a SocialMediaEvent and an IllustratorEvent to start a parallel execution.

- Like before, custom prompt instructions for the social media agent are augmented with the blog post before being passed to the model. The model returns a LinkedIn post (other platforms will be added later), and the step saves it to the same directory as before using the UUID and publishes a SocialMediaCompleteEvent.

- At the same time, the illustrator step begins. This step is different because DALL-E 3 accepts a much shorter prompt than a chat model, so it can’t take the full blog post. To work around this limitation, we initialize another agent with custom instructions to summarize the blog post into prompt instructions for an image generation model. The resulting prompt is fed into DALL-E 3 to create the image. The image is saved in the same local directory as the blog and LinkedIn post, and the step publishes an IllustratorCompleteEvent.

- The collection step is a placeholder for future enhancements. It shows how to collect multiple events for later processing after running a parallel flow: it waits until both a SocialMediaCompleteEvent and an IllustratorCompleteEvent have arrived, then returns the StopEvent that ends the workflow.

- Note: The blog-without-research step follows the same logical flow but is not augmented by Tavily search results. It writes a blog post and publishes events so the social media and illustrator agents can perform their tasks.
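The fan-in behavior of the collection step can be modeled in a few lines of plain Python. The sketch below is a toy illustration of the buffering idea only, not the library's implementation: `EventCollector` is a hypothetical class, and the two dataclasses are stand-ins for the real event types.

```python
from dataclasses import dataclass


# Stand-ins for the completion events emitted by the two parallel branches.
@dataclass
class SocialMediaCompleteEvent:
    result: str


@dataclass
class IllustratorCompleteEvent:
    result: str


class EventCollector:
    """Buffers events until one of each expected type has arrived."""

    def __init__(self) -> None:
        self._buffer: list = []

    def collect_events(self, ev, expected: list):
        self._buffer.append(ev)
        remaining = list(self._buffer)
        collected = []
        for event_type in expected:
            match = next((e for e in remaining if isinstance(e, event_type)), None)
            if match is None:
                return None  # still waiting on at least one branch
            remaining.remove(match)
            collected.append(match)
        self._buffer = remaining  # keep anything that was not consumed
        return collected


collector = EventCollector()
expected = [SocialMediaCompleteEvent, IllustratorCompleteEvent]

# The illustrator branch happens to finish first: nothing is released yet.
first = collector.collect_events(IllustratorCompleteEvent(result="Images drawn"), expected)
# The social media branch finishes: both events are released together.
second = collector.collect_events(SocialMediaCompleteEvent(result="LinkedIn post written"), expected)

print(first)
print(second)
```

Whichever branch finishes first, the step body after the check only runs once, when the last expected event has arrived. That is why the collection step can safely return the StopEvent there.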

See the source code linked above to review the prompt instructions and helper functions used throughout the workflow.
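For readers who want a rough idea before opening the repository, here is a hedged sketch of what two such helpers might look like. These are assumptions, not the project's actual code: the file layout (`<uuid>/<type>.md`), the `base_dir` parameter, and the generic `call_with_retries` function are all hypothetical. Only the call shape `save_file(content, uuid, type=...)` is taken from the workflow above.

```python
import asyncio
import os


def save_file(content: str, uuid: str, type: str = "blog", base_dir: str = ".") -> str:
    """Write content to <base_dir>/<uuid>/<type>.md and return the path.

    The parameter name `type` mirrors the keyword used in the workflow,
    even though it shadows the builtin. The file layout is an assumption.
    """
    directory = os.path.join(base_dir, uuid)
    os.makedirs(directory, exist_ok=True)
    path = os.path.join(directory, f"{type}.md")
    with open(path, "w", encoding="utf-8") as f:
        f.write(content)
    return path


async def call_with_retries(func, *args, retries: int = 3, base_delay: float = 1.0):
    """Await func(*args), retrying with exponential backoff on any exception."""
    for attempt in range(retries):
        try:
            return await func(*args)
        except Exception:
            if attempt == retries - 1:
                raise  # out of attempts: surface the last error
            # Wait base_delay, then 2x, then 4x, ... before the next attempt.
            await asyncio.sleep(base_delay * 2 ** attempt)
```

A retry wrapper like this is useful around image generation because a single failed request would otherwise fail the whole workflow run after the blog post has already been written.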

Thank you for reading! In part two of this series, I will expand this notebook into a full-fledged server using FastAPI. See part 2 here: https://www.markellrichards.com/amplifying-creativity-building-an-ai-powered-content-creation-assistant-part-2

Check out my GitHub for the complete implementation. I look forward to any feedback and discussions around AI/ML. I am currently a Software Architect at Groups360. Feel free to connect with me on LinkedIn.
